PL-SLAM

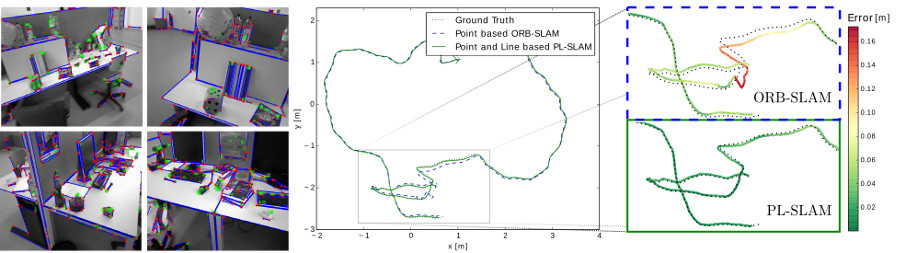

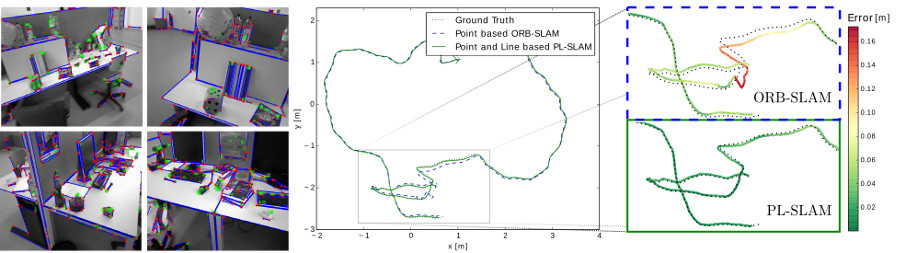

Low-textured scenes are well known to be one of the main Achilles' heels of geometric computer vision algorithms that rely on point correspondences, and of visual SLAM in particular. Yet there are many environments in which, despite the low texture, one can still reliably estimate line-based geometric primitives: for instance, city and indoor scenes, or the so-called "Manhattan worlds", where structured edges are predominant. In this paper we propose a solution to handle these situations. Specifically, we build upon ORB-SLAM, presumably the current state-of-the-art solution in terms of both accuracy and efficiency, and extend its formulation to handle point and line correspondences simultaneously. Our solution works even when most of the points have vanished from the input images and, interestingly, it can be initialized solely from line correspondences detected in three consecutive frames. We thoroughly evaluate our approach and the new initialization strategy on the TUM RGB-D benchmark and demonstrate that the use of lines not only improves the performance of the original ORB-SLAM solution in poorly textured frames, but also systematically improves it in frames where points and lines are both available, without compromising efficiency.
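The abstract says the ORB-SLAM formulation is extended to line correspondences but does not spell out how a line contributes to the optimization. As a hedged illustration, the sketch below shows one common way to score a line correspondence: project the two 3D endpoints of a map line with a pinhole camera and measure their signed distances to the infinite 2D line through the detected segment's endpoints. The intrinsics tuple `K = (fx, fy, cx, cy)`, the helper names, and the assumption that the 3D endpoints are already expressed in camera coordinates are illustrative choices; this is not the authors' code and is not claimed to be PL-SLAM's exact cost.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]
Intrinsics = Tuple[float, float, float, float]  # hypothetical (fx, fy, cx, cy)


def cross(a: Vec3, b: Vec3) -> Vec3:
    """3D cross product, used to build a homogeneous line from two points."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def line_from_endpoints(p: Vec2, q: Vec2) -> Vec3:
    """Homogeneous 2D line through two detected endpoints, scaled so that
    l[0]*u + l[1]*v + l[2] is the signed pixel distance of (u, v) to the line."""
    l = cross((p[0], p[1], 1.0), (q[0], q[1], 1.0))
    norm = math.hypot(l[0], l[1])
    if norm == 0.0:
        raise ValueError("degenerate segment: endpoints coincide")
    return (l[0] / norm, l[1] / norm, l[2] / norm)


def project(K: Intrinsics, X: Vec3) -> Vec2:
    """Pinhole projection of a 3D point given in camera coordinates (Z > 0)."""
    fx, fy, cx, cy = K
    return (fx * X[0] / X[2] + cx, fy * X[1] / X[2] + cy)


def line_residual(K: Intrinsics, P: Vec3, Q: Vec3,
                  p_det: Vec2, q_det: Vec2) -> Tuple[float, float]:
    """Signed distances of the projected 3D endpoints P, Q to the detected line."""
    l = line_from_endpoints(p_det, q_det)
    residuals = []
    for X in (P, Q):
        u, v = project(K, X)
        residuals.append(l[0] * u + l[1] * v + l[2])
    return (residuals[0], residuals[1])


if __name__ == "__main__":
    K = (500.0, 500.0, 320.0, 240.0)
    # Detected horizontal segment at v = 240; the second 3D endpoint is slightly off.
    print(line_residual(K, (-0.5, 0.0, 2.0), (0.5, 0.02, 2.0),
                        (100.0, 240.0), (500.0, 240.0)))
```

In a bundle-adjustment setting, the squared residuals of such line terms would be summed with the usual point reprojection errors. Because each residual is a point-to-line distance, it does not penalize the projected endpoints sliding along the detected line, which tolerates partially occluded or fragmented line detections.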

The video shows an evaluation of PL-SLAM and the new initialization strategy on a TUM RGB-D benchmark sequence. The sequence selected is the same as the one used to generate Figure 1 of the paper.

Legend:

- Map: estimated camera position (green box), camera key frames (blue boxes), point features (green points) and line features (red-blue endpoints)

- Current Frame: point features (green boxes) and line features (red-blue endpoints)

BibTeX

@incollection{pumarola2019relative,
  title={Relative Localization for Aerial Manipulation with PL-SLAM},
  author={A. Pumarola and A. Vakhitov and A. Agudo and F. Moreno-Noguer and A. Sanfeliu},
  booktitle={Aerial Robotic Manipulation},
  pages={239--248},
  year={2019},
  publisher={Springer}
}

@inproceedings{pumarola2017plslam,
  title={{PL-SLAM: Real-Time Monocular Visual SLAM with Points and Lines}},
  author={A. Pumarola and A. Vakhitov and A. Agudo and A. Sanfeliu and F. Moreno-Noguer},
  booktitle={International Conference on Robotics and Automation},
  year={2017}
}

Publications

2017

- PL-SLAM: Real-Time Monocular Visual SLAM with Points and Lines

- A. Pumarola, A. Vakhitov, A. Agudo, A. Sanfeliu and F. Moreno-Noguer

- IEEE International Conference on Robotics and Automation (ICRA), 2017.

Acknowledgments

This work has been partially supported by the Spanish Ministry of Science and Innovation under projects HuMoUR TIN2017-90086-R and ColRobTransp DPI2016-78957; by the European project AEROARMS (H2020-ICT-2014-1-644271); by a Google faculty award; and by the Spanish State Research Agency through the María de Maeztu Seal of Excellence to IRI MDM-2016-0656.